If you are new to data engineering, or a business owner interested in exploring real-world data engineering projects, start with the most popular programming languages and tools for data engineering:

Python

Python is a high-level, object-oriented programming language that has gained significant traction in data engineering. Its appeal lies in its simplicity and versatility, supported by a vast array of libraries and frameworks. Python excels at data processing, transformation, and visualization, which makes it a good fit for many data engineering tasks. It is commonly used to build ETL (Extract, Transform, Load) pipelines and integrates with Big Data technologies like Hadoop, Hive, and Impala. Notable tools in the Python ecosystem include Apache Airflow, Apache Spark (through its PySpark API), and pandas.
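To make the ETL idea concrete, here is a minimal sketch that uses only the Python standard library. The sample data, the `orders` table, and the function names are assumptions made for illustration; a production pipeline would typically read from real sources and run under an orchestrator.

```python
import csv
import io
import sqlite3

# Hypothetical raw input; in practice this would come from a file or an API.
RAW_CSV = """order_id,customer,amount
1,alice,10.50
2,bob,not_a_number
3,alice,4.25
"""


def extract(text):
    """Extract: parse CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: cast types and drop rows whose amount is not numeric."""
    clean = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # skip malformed records
        clean.append((int(row["order_id"]), row["customer"].title(), amount))
    return clean


def load(records, conn):
    """Load: write the cleaned records into a SQLite table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", records)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
loaded = conn.execute(
    "SELECT order_id, customer, amount FROM orders ORDER BY order_id"
).fetchall()
print(loaded)
```

Keeping extract, transform, and load as separate functions makes each stage easy to test and replace on its own, for example by swapping SQLite for a data warehouse.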

SQL

SQL (Structured Query Language) is an essential skill for data engineers, serving as the standard language for managing and manipulating data within relational databases. It allows users to create and modify database schemas, query and analyze data, and perform transformations through ETL processes. SQL is powerful and widely utilized, capable of handling large datasets while integrating smoothly with other data technologies.
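As a small illustration of those three uses (defining a schema, modifying it, and querying with a transformation), the sketch below runs SQL through Python's built-in `sqlite3` module. The `sales` table and its rows are made up for the example, and SQL dialects differ slightly between database engines.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Schema definition: create a table.
conn.execute("CREATE TABLE sales (region TEXT, product TEXT, amount REAL)")

# Data manipulation: insert a few made-up rows.
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [
        ("north", "widget", 120.0),
        ("north", "gadget", 80.0),
        ("south", "widget", 50.0),
    ],
)

# Schema modification: add a column to the existing table.
conn.execute("ALTER TABLE sales ADD COLUMN currency TEXT DEFAULT 'USD'")

# Query and transformation: aggregate revenue per region.
totals = conn.execute(
    "SELECT region, SUM(amount) AS revenue "
    "FROM sales GROUP BY region ORDER BY region"
).fetchall()
print(totals)
```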

Scala

Scala has emerged as a favored programming language in the realm of data engineering due to its functional programming capabilities and compatibility with Java. Its concise syntax enables developers to construct complex data processing pipelines efficiently and manage large datasets effectively across distributed systems. Scala is particularly well-suited for big data applications, with frameworks like Apache Spark and Apache Flink providing robust support for its use.

These three programming languages (Python, SQL, and Scala) are pivotal in data engineering, each offering distinct strengths for building efficient data solutions. The global market for data engineering services and big data is expected to grow significantly, reaching $87.37 billion by 2025, up from $39.50 billion in 2020, reflecting a compound annual growth rate (CAGR) of 17.6%. As investments in data teams and infrastructure continue to rise, the variety and sophistication of data engineering tools have also expanded.

Data engineering is a specialized field that relies heavily on tools to streamline and automate the creation of data pipelines. Selecting the right tools is crucial for swift and reliable business decision-making. This article presents a curated list of 25 data engineering tools and highlights their essential features across the different layers of data engineering infrastructure.

List of top data engineering tools

1. Amazon Kinesis
2. Azure Event Hubs
3. Google Cloud Pub/Sub
4. Apache Kafka
5. Apache Flume
6. AWS Glue
7. Azure Stream Analytics
8. Google Cloud Dataflow
9. Apache Flink
10. Apache Spark
11. Apache Storm
12. Amazon Redshift
13. BigQuery
14. Azure Data Lake Storage
15. Apache Hadoop
16. Cassandra
17. Apache Hive
18. Snowflake
19. AWS Glue Data Catalog
20. Azure Data Catalog
21. GCP Data Catalog
22. Apache Atlas
23. Power BI
24. Looker
25. Tableau

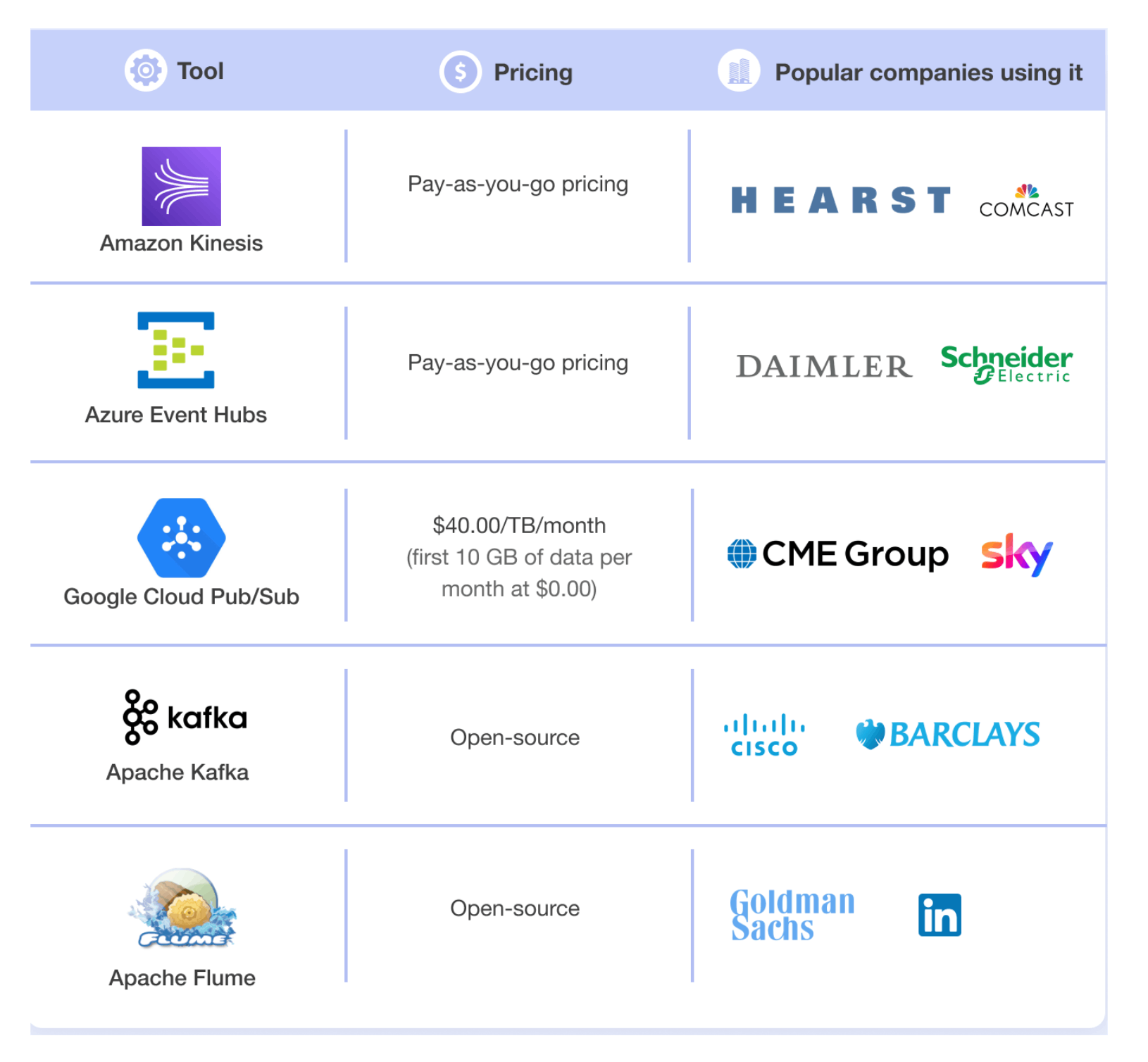

1. Amazon Kinesis

Amazon Kinesis is a fully managed service that facilitates real-time data streaming, enabling users to collect and analyze data from various sources, including application logs and IoT devices. Key components include Kinesis Data Streams for continuous ingestion, Kinesis Video Streams for video data, and Kinesis Data Firehose for delivering data to storage solutions.

2. Azure Event Hubs

Azure Event Hubs is an event ingestion and processing service capable of collecting and processing millions of events per second with low latency and high reliability. It supports real-time analytics for applications such as anomaly detection and application logging, and data sent to an event hub can be transformed and stored using real-time analytics providers or batching and storage adapters. The service acts as a central hub for event streams, enabling businesses to respond swiftly to data-driven insights.

3. Google Cloud Pub/Sub

Google Cloud Pub/Sub is a fully managed messaging and ingestion service that facilitates real-time communication between independent applications and services. It enables seamless data flow for streaming analytics, event-driven architectures, and data integration pipelines, making it an essential tool for ingesting and distributing data efficiently across systems.
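The decoupling behind the publish/subscribe pattern can be sketched with a toy in-memory broker. This is a conceptual illustration only: `InMemoryBroker` and the `orders` topic are hypothetical names, and none of this is the Google Cloud Pub/Sub client API, which also handles delivery, acknowledgement, and retention.

```python
from collections import defaultdict


class InMemoryBroker:
    """Toy stand-in for a messaging service; not the Pub/Sub client API."""

    def __init__(self):
        self._subscribers = defaultdict(list)  # topic name -> callbacks

    def subscribe(self, topic, callback):
        self._subscribers[topic].append(callback)

    def publish(self, topic, message):
        # Every subscriber registered on the topic receives the message.
        for callback in self._subscribers[topic]:
            callback(message)


broker = InMemoryBroker()
analytics_inbox, audit_inbox = [], []

# Two independent consumers; the publisher knows nothing about either.
broker.subscribe("orders", analytics_inbox.append)
broker.subscribe("orders", audit_inbox.append)

broker.publish("orders", {"order_id": 1, "amount": 10.5})
print(analytics_inbox, audit_inbox)
```

Because the publisher only knows the topic name, new consumers can be added later without changing the producing application.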

4. Apache Kafka

Apache Kafka is an open-source, distributed event streaming platform known for its high throughput, low latency, and fault tolerance. Organizations use it to ingest, process, store, and analyze large volumes of data, with use cases such as building data pipelines, streaming analytics, and integrating data from multiple sources.

5. Apache Flume

Apache Flume is an open-source service designed for the efficient collection, aggregation, and transportation of large amounts of streaming event or log data. It can gather data from various sources and transport it to a centralized data store in a distributed manner, making it suitable not only for log data aggregation but also for handling unstructured event data from sources like social media or network traffic.

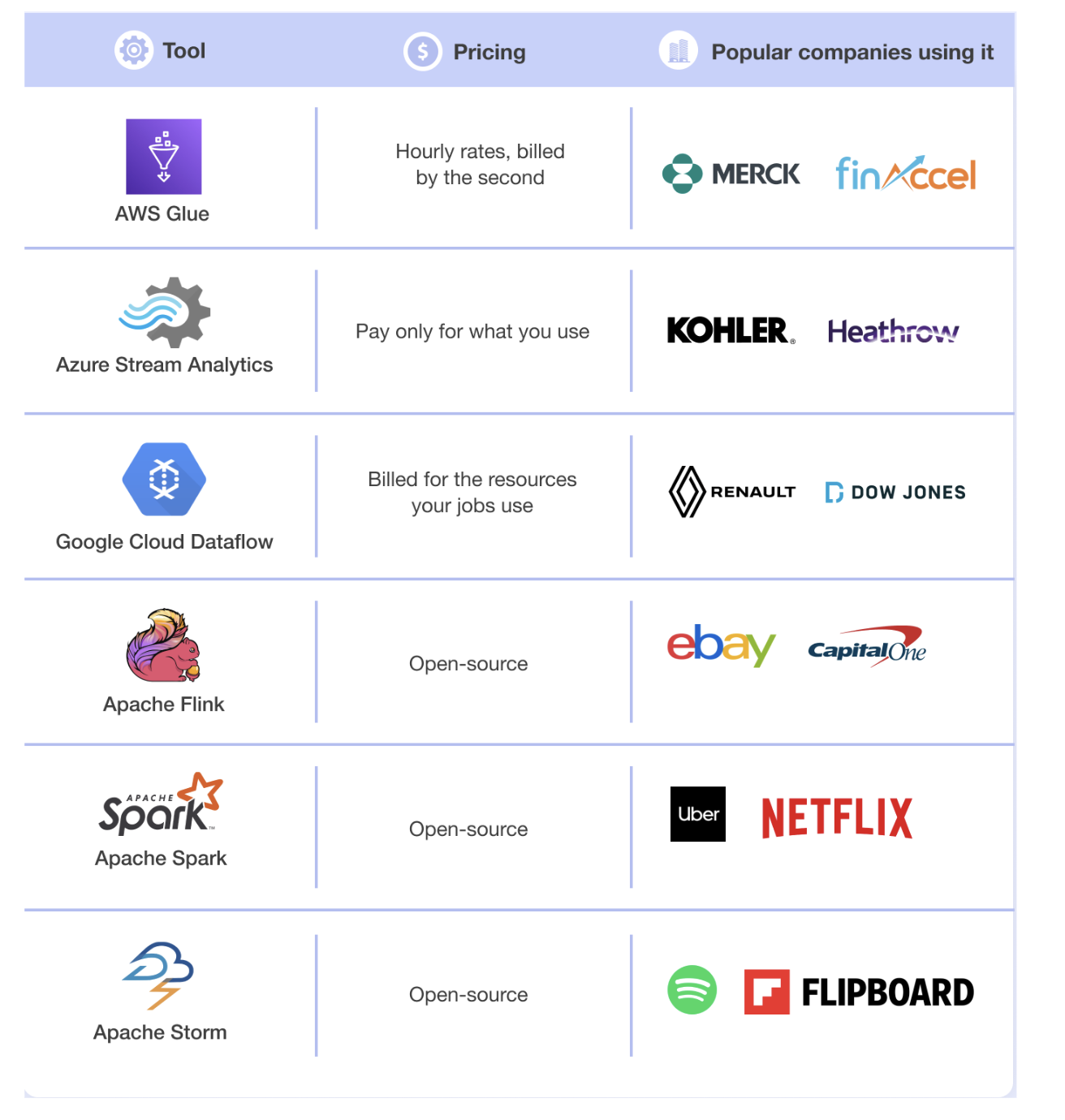

6. AWS Glue

AWS Glue is a serverless data integration service that simplifies the discovery, preparation, and transformation of data from multiple sources for analytics, machine learning, and application development. It allows users to quickly gain insights by automating ETL (Extract, Transform, Load) processes and managing data catalogs efficiently.

7. Azure Stream Analytics

Azure Stream Analytics is a fully managed service that processes millions of events per second with ultra-low latency. It analyzes data from various sources, such as sensors and applications, making it ideal for scenarios like anomaly detection and predictive maintenance.

8. Google Cloud Dataflow

Google Cloud Dataflow is a fully managed data processing service that unifies stream and batch processing with low latency. It supports use cases such as stream analytics and log data processing while offering real-time AI capabilities for advanced analytics solutions.

9. Apache Flink

Apache Flink is a distributed processing engine designed for stateful computations over both bounded and unbounded datasets. It delivers high throughput and low latency, making it suitable for applications like stream analytics, ETL processes, and data pipelines.
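To illustrate what "stateful computation over bounded and unbounded datasets" means, the following sketch uses plain Python generators rather than the Flink API. The `running_counts` operator and the `sensor_stream` source are hypothetical names; a real engine would add distribution, checkpointing, and fault tolerance on top of this idea.

```python
from collections import defaultdict
from itertools import count, islice


def running_counts(events):
    """Stateful operator: keep a per-key count and emit it after every event."""
    state = defaultdict(int)  # keyed state, kept across events
    for key in events:
        state[key] += 1
        yield key, state[key]


# Bounded input: a finite list is processed to completion.
bounded = ["a", "b", "a"]
bounded_result = list(running_counts(bounded))
print(bounded_result)


def sensor_stream():
    """Unbounded input: an endless generator of keys."""
    for i in count():
        yield "even" if i % 2 == 0 else "odd"


# The same operator runs on the unbounded source; only 5 results are taken.
unbounded_result = list(islice(running_counts(sensor_stream()), 5))
print(unbounded_result)
```

The same operator code handles both inputs; the only difference is whether the source ever ends.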

10. Apache Spark

Apache Spark is a distributed computing engine renowned for large-scale data processing and analytics. Its speed and versatility allow users to perform various operations on data at scale, including data engineering, data science, and machine learning tasks.

11. Apache Storm

Apache Storm is a distributed real-time computing engine designed for processing unbounded data streams with high throughput and low latency. Its parallel processing capabilities make it ideal for applications such as real-time analytics, online machine learning, and ETL tasks, allowing businesses to gain insights from data as it arrives.

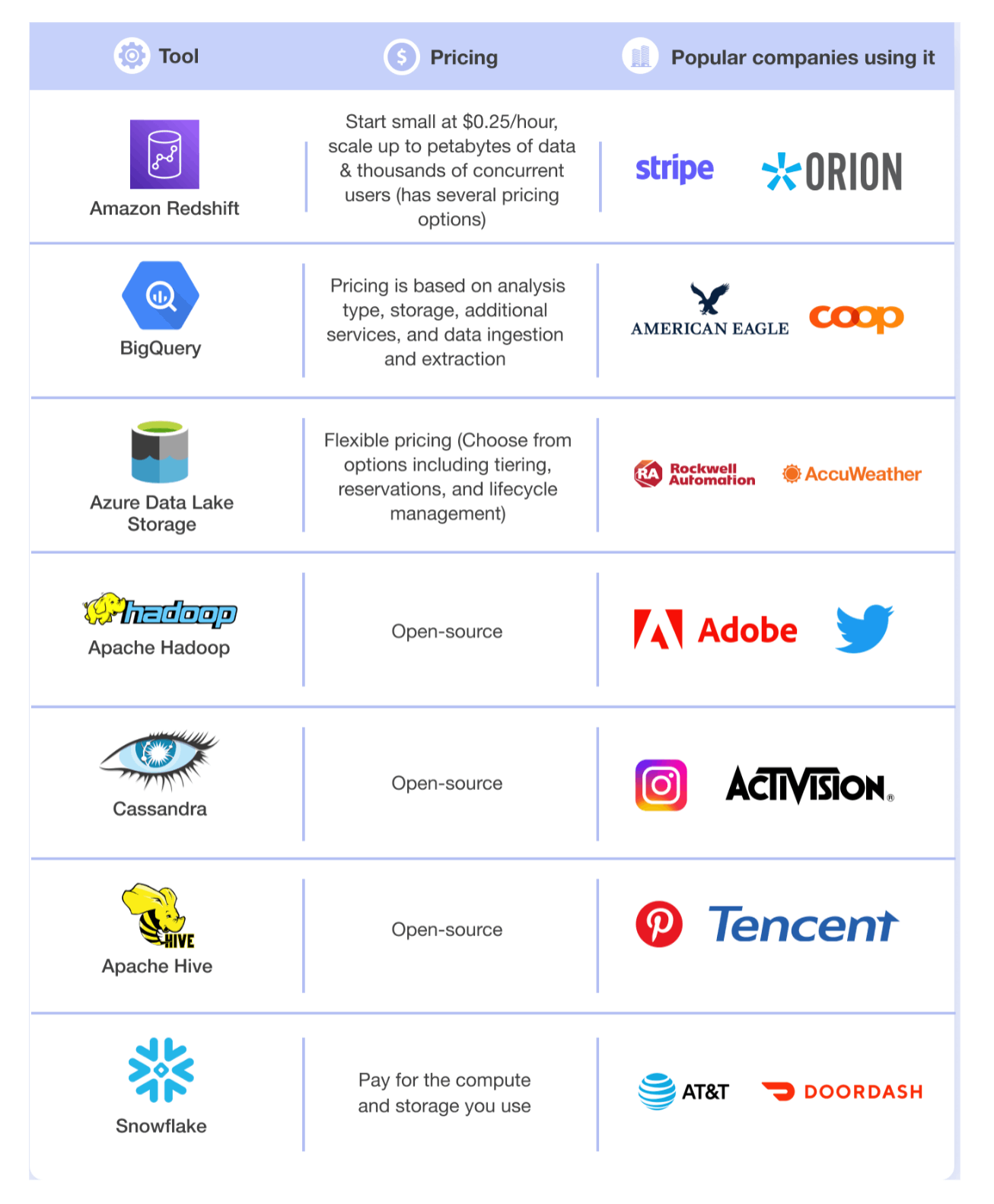

12. Amazon Redshift

Amazon Redshift is a cloud-based data warehousing service that enables users to run complex analytical queries on vast amounts of data. It facilitates secure access and integration of data from various sources, allowing organizations to derive business insights efficiently while minimizing data movement.

13. BigQuery

BigQuery is Google Cloud's fully managed, serverless data warehouse that consolidates siloed data for comprehensive analysis. It enables real-time decision-making and streamlines business reporting by providing insights from all business data in one accessible location.

14. Azure Data Lake Storage

Azure Data Lake Storage is a secure and scalable data lake optimized for enterprise big data analytics workloads. It allows for high-performance analytics without the need for prior data transformation or copying, leveraging the cost-effective infrastructure of Azure Blob Storage.

15. Apache Hadoop

Apache Hadoop is an open-source framework that facilitates the storage and processing of big data across distributed computing environments. Its core components include the Hadoop Distributed File System (HDFS) for storage and the MapReduce programming model for efficient data processing, making it a popular choice for scalable and fault-tolerant big data applications.
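The MapReduce programming model can be sketched in a few lines of single-process Python. This is a word-count illustration of the map, shuffle, and reduce phases, not Hadoop code: the function names and input splits are made up, and Hadoop would run each phase in parallel across the cluster.

```python
from collections import defaultdict


def map_phase(document):
    """Map: emit a (word, 1) pair for every word in one input split."""
    return [(word.lower(), 1) for word in document.split()]


def shuffle(pairs):
    """Shuffle: group all emitted values by key."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce: combine the values for one key into a single result."""
    return key, sum(values)


splits = ["big data big pipelines", "data pipelines at scale"]
mapped = [pair for doc in splits for pair in map_phase(doc)]
word_counts = dict(reduce_phase(k, v) for k, v in shuffle(mapped).items())
print(word_counts)
```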

16. Cassandra

Apache Cassandra is a highly scalable NoSQL database designed to handle massive amounts of data with low latency. Its support for multi-data center replication ensures fault tolerance, while its linear scalability allows read and write throughput to increase seamlessly as more nodes are added.

17. Apache Hive

Apache Hive is a data warehouse infrastructure that allows for large-scale analytics and ad hoc querying of big data. With features like the Hive Metastore (HMS) for centralized metadata management, it integrates well with various open-source tools, enabling informed decision-making based on extensive datasets.

18. Snowflake

Snowflake is a cloud-based data warehousing platform that offers scalable storage and efficient management of large datasets. By separating compute and storage resources, it optimizes performance and supports various workloads, streamlining data engineering processes like ingestion, transformation, and analysis for deeper insights.

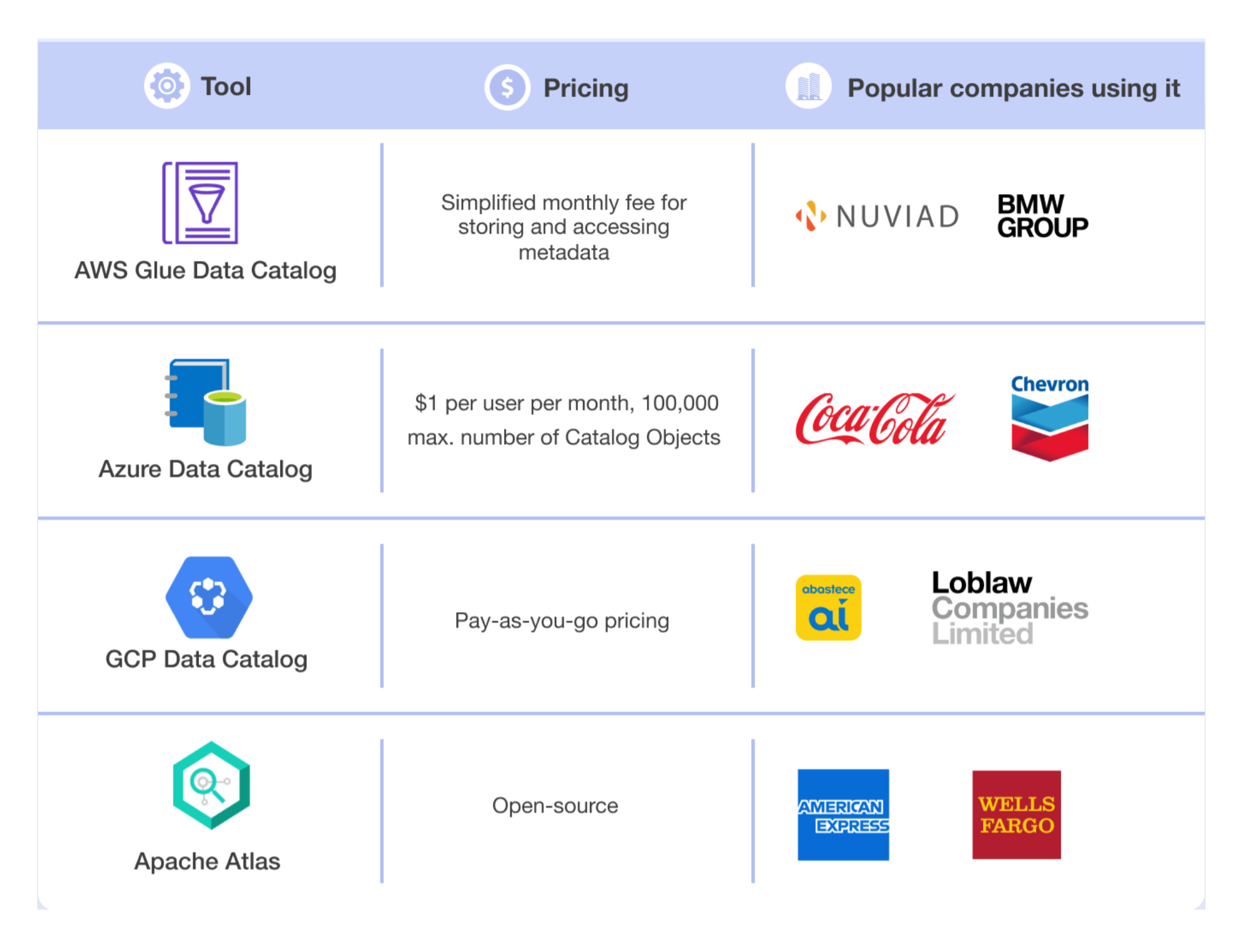

19. AWS Glue Data Catalog

The AWS Glue Data Catalog is a fully managed metadata repository that organizes and stores metadata for AWS Glue ETL jobs and other analytics services within the AWS ecosystem. It serves as a centralized hub for information about data assets, including tables, databases, and schemas, facilitating efficient data discovery and management.
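Conceptually, a metadata catalog maps databases to tables and tables to schemas so that data can be discovered without scanning it. The sketch below models that idea with plain Python dataclasses; `Catalog`, `TableEntry`, and the example bucket paths are hypothetical and do not represent the AWS Glue API.

```python
from dataclasses import dataclass, field


@dataclass
class TableEntry:
    name: str
    location: str
    schema: dict  # column name -> type


@dataclass
class Catalog:
    # database name -> {table name -> TableEntry}
    databases: dict = field(default_factory=dict)

    def register(self, database, table):
        self.databases.setdefault(database, {})[table.name] = table

    def find_tables_with_column(self, column):
        """Data discovery: list (database, table) pairs that contain a column."""
        return sorted(
            (db, t.name)
            for db, tables in self.databases.items()
            for t in tables.values()
            if column in t.schema
        )


catalog = Catalog()
catalog.register(
    "sales",
    TableEntry(
        "orders",
        "s3://example-bucket/orders/",
        {"order_id": "bigint", "customer_id": "bigint"},
    ),
)
catalog.register(
    "crm",
    TableEntry(
        "customers",
        "s3://example-bucket/customers/",
        {"customer_id": "bigint", "email": "string"},
    ),
)
matches = catalog.find_tables_with_column("customer_id")
print(matches)
```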

20. Azure Data Catalog

Azure Data Catalog is an enterprise-wide metadata catalog from Microsoft Azure that simplifies data asset discovery for users across various roles, from analysts to data scientists. This fully managed service allows users to register, enrich, and understand data, providing a centralized platform for managing metadata about data assets.

21. GCP Data Catalog

Google Cloud's Data Catalog is a scalable, fully managed metadata management service integrated with Dataplex. It enables organizations to discover, manage, and understand their data across Google Cloud quickly, enhancing data management efficiency and supporting data-driven decision-making.

22. Apache Atlas

Apache Atlas is a scalable metadata management and governance framework that helps organizations classify, manage, and govern their data assets on Hadoop clusters. It facilitates collaboration across the enterprise data ecosystem, ensuring effective data governance.

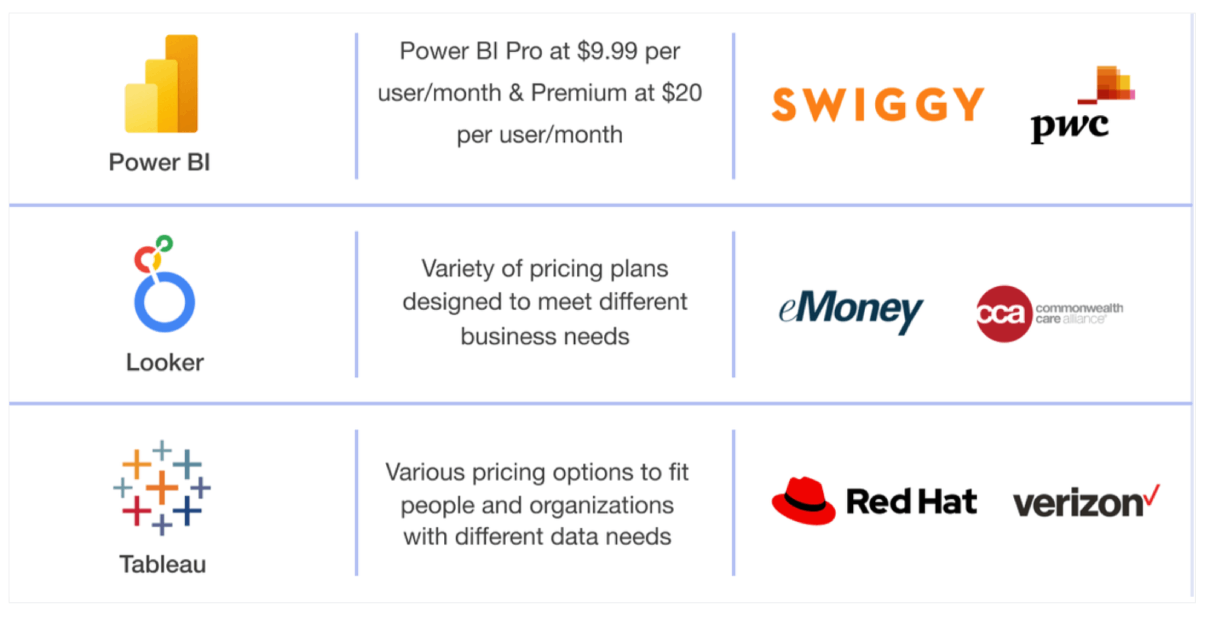

23. Power BI

Microsoft Power BI is an end-to-end business intelligence platform that connects to various data sources to visualize information and uncover insights. With AI-powered features like natural language queries, it enables users to obtain quick answers to business questions and share reports collaboratively.

24. Looker

Looker is a cloud-based business intelligence and analytics platform that is now part of Google Cloud. It generates SQL from a modeling layer (LookML) in which dimensions and aggregate measures are defined, and it lets users build visualizations to communicate insights across teams.

25. Tableau

Tableau is a leading business intelligence and data visualization tool that enables users to create interactive dashboards and reports. Its intuitive drag-and-drop interface makes it easy to connect to various data sources and generate insightful visualizations, helping users uncover insights quickly.

When selecting tools, it's essential to choose those that not only enhance your business or data solutions but are also cost-effective and aligned with future growth. Talk to our experts for personalized advice on the best data engineering tools and services tailored to your unique requirements.